Нейронная языковая модель. Векторы слов.¶

%matplotlib inline

from IPython.display import set_matplotlib_formats

set_matplotlib_formats('pdf', 'svg')

from sklearn.datasets import fetch_20newsgroups

import numpy as np

import gensim.parsing.preprocessing as gp

import nltk

from sklearn import feature_extraction, metrics

from sklearn import naive_bayes, linear_model, svm

from sklearn.preprocessing import Binarizer

from keras import models, layers, utils, callbacks, optimizers

from itertools import chain

import json

Языковая модель задает распределение вероятности над строками языка. Если строки состоят из слов (есть также посимвольные модели), то по сути модель задаёт распределение вероятностей вида $P(w_1,w_2,...,w_n)$, где $n$ - длина строки. Типичная модель описывает распределение $P(w_i~|~w_1,...,w_{i-1})$, тогда $P(w_1,w_2,...,w_n) = P(w_n~|~w_1,w_2,...,w_{n-1})P(w_1,w_2,...,w_{n-1})$.

Марковские модели $k$-го порядка упрощают распределение $P(w_i~|~w_1,...,w_{i-1})$ до $P(w_i~|~w_{i-k-1},...,w_{i-1})$, т.е. вероятность следующего слова зависит только от $k+1$ предыдущих слов. Они также называются n-gram моделями. Модель нулевого порядка называется униграм моделью, первого - биграм моделью, второго, третьего и четвертого - триграм-моделью, 4-грам, 5-грам и т.д.

Языковые модели активно применяются в задачах интерпретации, например, при распознавании речи. Аналогично можно искать наиболее вероятное исправление текста, наиболее вероятный перевод фразы. Мы часто сталкиваемся с языковыми моделями когда набираем текст на телефоне.

Разумеется, сэмплирование из языковой модели позволяет генерировать текст. В зависимости от типа модели и данных, на которых она натренирована, данный текст будет в большей или меньшей степени похож на "настоящий".

В этой тетради мы построим модель, натренированную на форумных сообщениях из набора данных 20 newsgroups.

Разумеется, сэмплирование из языковой модели позволяет генерировать текст. В зависимости от типа модели и данных, на которых она натренирована, данный текст будет в большей или меньшей степени похож на "настоящий".

В этой тетради мы построим модель, натренированную на форумных сообщениях из набора данных 20 newsgroups.

train_data = fetch_20newsgroups(subset='train',remove=['headers', 'footers', 'quotes'])

test_data = fetch_20newsgroups(subset='test',remove=['headers', 'footers', 'quotes'])

text = train_data.data[0]

print(text)

Поскольку наша задача - генерировать более-менее натуральный текст, мы воспользуемся куда более аккуратной токенизацией из библиотеки NLTK, по сравнению с грубой обработкой в прошлом туториале. Единственное, текст все равно переведен в нижний регистр и соотв. генерироваться будет аналогично, это сделано, чтобы немного уменьшить объём данных для тренировки и объём словаря.

def tokenized(documents):

def process_document(doc: str):

words = nltk.tokenize.word_tokenize(doc)

return [w.lower() for w in words]

return [process_document(doc) for doc in documents]

tokens_train = tokenized(train_data.data)

Мы используем и тренировочные и тестовые данные из задачи классификации для тренировки модели.

tokens_train.extend(tokenized(test_data.data))

print(tokens_train[0])

Назначим каждому слову номер, используя CountVectorizer. Поскольку эта тетрадь запускалась несколько раз с целью дотренировки модели, было решено сохранить полученный словарь в файл и загружать его, поскольку я не уверен в детерминированности CountVectorizer. Поскольку тренируется 4-грам модель $P(w_4|w_1,w_2,w_3)$, введены специальные символы для начала текста <S>, конца </S>. Поскольку словарь модели будет ограничен, все слова встречающиеся менее 40000 раз будут заменены на спец. слово <UNK>. Вообще говоря, это спец. слово можно разнообразить, например, ввести слова <UNK_NOUN>, <UNK_VERB>, соотв. частям речи, но здесь мы этого не делаем.

# count_vectorizer = feature_extraction.text.CountVectorizer(preprocessor=lambda x:x,

# tokenizer=lambda x:x, max_features=40000)

# count_vectorizer.fit(tokens_train)

# feature_names = count_vectorizer.get_feature_names()

# feature_names.append('<UNK>')

# feature_names.append('<S>')

# feature_names.append('</S>')

# with open('nltk_feature_names4_wm.json','w+') as of:

# json.dump(feature_names,of)

Откроем файл со словарем (списком слов). Используя этот список назначим каждому слову номер (индекс в списке). Преобразуем все тексты (списки слов) в списки номеров этих слов.

with open('nltk_feature_names4_wm.json') as f:

feature_names = json.load(f)

vocab = {v:i for i,v in enumerate(feature_names)}

unk_index = vocab['<UNK>']

n_words = len(feature_names)

end_index = vocab['</S>']

start_index = vocab['<S>']

ids_train = []

ids_test = []

for row in tokens_train:

ids_train.append([vocab.get(word, unk_index) for word in row])

Полученные тексты в виде списков номеров разобъём на четвёрки (4-грамы) и поместим их в единый массив, выведем первые 10 элементов. Всего в массиве 4760679 n-gramов

ngrams = []

for row in ids_train:

for ngram in nltk.ngrams(row,4,pad_left=True, pad_right=True,

left_pad_symbol=start_index, right_pad_symbol=end_index):

ngrams.append(ngram)

print(ngrams[:10])

print(len(ngrams))

Преобразуем в numpy формат.

ngrams = np.array(ngrams)

print(ngrams.shape)

В качестве модели распределения $P(w_4|w_1,w_2,w_3)$ будем использовать нейронную сеть. Есть более простые, собственно n-gram модели, которые оценивают эти вероятности через относительные частоты и сглаживание (аналогично описанному в прежней тетради Наивному Байесовскому классификатору). Они тренируются очень быстро, но при этом потребляют много памяти. Выбор нейронной сети обусловлен некоторыми интересными свойствами получаемых моделей, а также их более высоким в целом качеством. Качество модели замеряется как правило через перплексию и кросс-энтропию - среднюю неопределенность следующего слова при известных предыдущих (меньше - лучше).

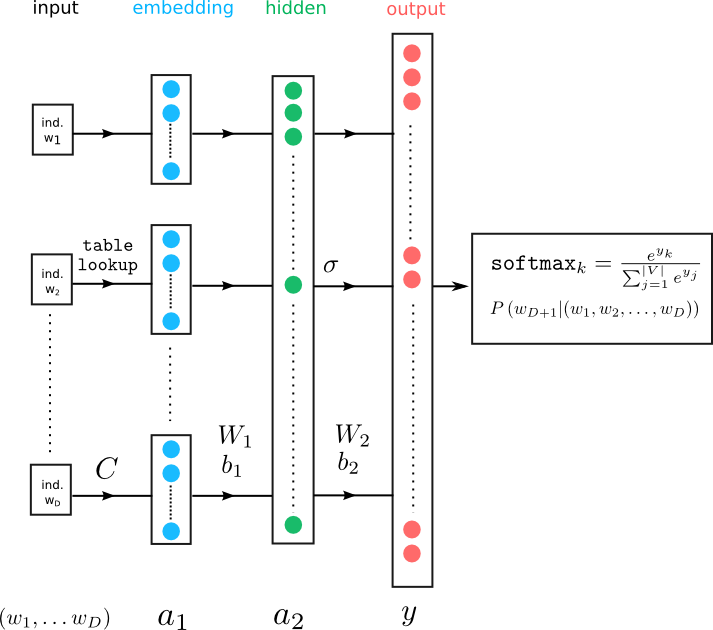

Модели на вход поставляются 3 слова в виде one-hot векторов, т.е. 40000-мерных векторов, в которых все элементы, кроме одного равного единице, равны нулю. Для каждого из трех слов используется одна и та же матрица весов, которая умножается на этот вектор, результат равен одному из её столбцов. Этот столбец называется вектором слова, а само преобразование категориальной переменной в вектор - встраиванием (embedding). Матрица весов соотв. называется встраивающей матрицей (Embedding матрицей). Полученные три вектора конкатенируются и подаются на скрытые слои сети, которые производят различные преобразования над ними. Последний слой использует функцию softmax для создания дискретного распределения вероятности над 40000 словами.

Сети нужны one-hot векторы в качестве эталонных выходов, и преобразовать все выходы в них сразу было бы убийственно по памяти, поэтому генерироваться экземпляры для тренировки будут налету. Сеть будет тренироваться пачками экземпляров фиксированного размера и перед каждой итерацией эти пачки будут генерироваться нижеописанной функцией. Три номера с каждого экземпляра попадают в матрицу $X$, а последний номер преобразовывается в one-hot вектор и попадает в матрицу $y$.

def make_batch(batch_size=128):

while True:

indices = np.random.randint(0, len(ngrams),size = batch_size)

rows = ngrams[indices]

X = rows[:,:-1]

labels = rows[:,-1]

y = utils.to_categorical(labels, num_classes=n_words)

yield X,y

XX,yy = next(make_batch())

print(XX.shape, XX.dtype)

print(yy.shape)

Построим сеть. Её первый слой задает для каждого слова обучаемый вектор размером 130. Далее эти векторы конкатенируются и подаются на следующий слой из 400 элементов, с кусочно-линейной активацией. Далее есть спец.слой нормализации, который проводит простое преобразование данных так, чтобы их среднее было близко к 0, а стандартное отклонение к 1. Нормализация данных часто ускоряет обучение сети, хотя я уже не помню, помогла ли она здесь. В любом случае этот слой не навредил. Последний слой имеет размерность 40003 и на его выходе распределение вероятностей (т.е. его выход суммируется в 1). softmax активация дает модели гибкость в приближении выхода по форме к one-hot вектору (см. введение в sklearn про softmax).

model = models.Sequential()

model.add(layers.Embedding(input_dim=n_words,output_dim=130, input_length=3))

model.add(layers.Flatten())

model.add(layers.Dense(400))

model.add(layers.Activation('relu'))

model.add(layers.BatchNormalization())

model.add(layers.Dense(units=n_words, activation='softmax'))

optimizer = optimizers.Adagrad()

model.compile(optimizer,loss='categorical_crossentropy')

# model = models.load_model('language_model_nltk42best_wm.h5')

Долго и мучительно тренируем модель. Хотя в данном случае использование оптимизатора Adagrad заметно улучшило скорость.

# model.fit_generator(make_batch(128),steps_per_epoch=2000,epochs=50, validation_data=make_batch(128),

# validation_steps=40,

# callbacks=[ callbacks.ModelCheckpoint('language_model_nltk42best_wm.h5',save_best_only=True),

# callbacks.ModelCheckpoint('language_model_nltk42latest_wm.h5')])

# model.fit_generator(make_batch(128),steps_per_epoch=2000,epochs=50, validation_data=make_batch(128),

# validation_steps=100,

# callbacks=[ callbacks.ModelCheckpoint('language_model_nltk42best_wm_cont2.h5',save_best_only=True),

# callbacks.ModelCheckpoint('language_model_nltk42latest_wm_cont2.h5')])

После каждой крупной итерации модель сохранялась и поскольку она уже натренирована, просто загрузим её из файла.

model = models.load_model('language_model_nltk42latest_wm_cont2.h5')

Эта функция возвращает в порядке убывания N наиболее вероятных слов и их вероятность, при заданных 3х предыдущих.

def distr(nn, start_vector, N):

start_vector = np.asarray(start_vector)

pred = nn.predict(start_vector.reshape(1,-1)).ravel()

ml = np.argsort(pred)[::-1]

return [ (index, pred[index]) for i, index in zip(range(N), ml)]

for index, prob in distr(model, [vocab['to'], vocab['travel'], vocab['to']], 10):

print(feature_names[index], prob)

Напишем функцию сэмплирования из модели. Ей на вход подается три предыдущих слова, а генерирует она текст длиной k или пока не будет сгенерирован символ конца текста. Для этого используется взвешенный случайный выбор из 40003 слов. По-умолчанию также исключается генерация символа <UNK>.

def sample_from_model(nn, k, length, seed_vector, generate_unk=False):

results = []

indices = np.arange(len(feature_names))

for i in range(k):

start_vector = np.array(seed_vector)

res = [feature_names[ind] for ind in start_vector]

for _ in range(length):

weights = nn.predict(start_vector.reshape(1,-1)).ravel()

if not generate_unk:

weights[unk_index] = 0

weights /= weights.sum()

next_ind = np.random.choice(indices,p=weights)

start_vector[0], start_vector[1], start_vector[2] = start_vector[1], start_vector[2], next_ind

if next_ind == end_index:

break

res.append(feature_names[next_ind])

results.append(res)

return results

samples = sample_from_model(model, 20, 10, [vocab['to'], vocab['travel'], vocab['to']])

for s in samples:

print(' '.join(s))

samples = sample_from_model(model, 10, 100, [vocab['<S>'], vocab['<S>'], vocab['<S>']])

for s in samples:

print(' '.join(s[3:]))

print('---------')

Вытащим встраивающую матрицу из сети (в Keras таки векторы слов являются строками, а не столбцами этой матрицы)

embedding_layer = model.get_layer(index=1)

weight_matrix = embedding_layer.get_weights()[0]

print(weight_matrix.shape)

Наиболее интересные свойства нейронных языковых моделей - это особенности векторов. А именно, векторы слов, похожих по смыслу, похожи между собой (особенно если использовать для этого косинус угла между ними). Это связано с тем, что похожие по смыслу слова имеют тенденцию встречаться в похожих контекстах. Распределение вероятности над следующим словом зависит от регионов в непрерывном пространстве, в которых находятся векторы текущих слов. Таким образом, если какое-либо слово вероятно в данном контексте, то вероятны и похожие на него слова, даже если в тренировочном наборе данных это похожее слово в данном контексте никогда не встречалось. Именно этим обуславливается более высокое качество нейронных моделей, можно сказать, что они более "креативны". Векторная семантика активно развивается в настоящее время и для получения векторов слов были выработаны более эффективные метода (например, на задаче предсказания слов в окне вокруг входного 1 слова). Однако всё-таки, рассмотрим векторы слов полученных на неспециализированой под них задаче.

word_row = weight_matrix[vocab['science']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:30]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['russia']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['woman']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['sister']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['god']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['keyboard']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['pretty']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['good']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['jpg']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['strcmp']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['jewish']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['gun']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['satan']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['friend']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['clinton']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['car']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['astronomy']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])

word_row = weight_matrix[vocab['mars']]

sims = metrics.pairwise.cosine_similarity(word_row.reshape(1,-1), weight_matrix)

most_similar = np.argsort(sims.ravel())[::-1]

print(sims.shape)

for ms in most_similar[:25]:

print(feature_names[ms], sims[0,ms])